Locality-Sensitive Hashing Does Not Guarantee Privacy! Attacks on Google’s FLoC and the MinHash Hierarchy System

Authors: Florian Turati, Karel Kubicek karel.kubicek@inf.ethz.ch, Carlos Cotrini, and David Basin

Abstract: Recently proposed systems aim at achieving privacy using locality-sensitive hashing. We show how these approaches fail by presenting attacks against two such systems: Google’s FLoC proposal for privacy-preserving targeted advertising and the MinHash Hierarchy, a system for processing location trajectories in a privacy-preserving way. Our attacks refute the pre-image resistance, anonymity, and privacy guarantees claimed for these systems.

In the case of FLoC, we show how to deanonymize users using Sybil attacks and to reconstruct 10% or more of the browsing history for 30% of its users using Generative Adversarial Networks. We achieve this only analyzing the hashes used by FLoC. For MinHash, we precisely identify the location trajectory of a subset of individuals and, on average, we can limit users’ trajectory to just 10% of the possible geographic area, again using just the hashes. In addition, we refute their differential privacy claims.

- Download the paper: PDF

- Published in Proceedings on Privacy Enhancing Technologies 2023(4), 117–131

- Source code and supplementary material: GitHub (anonymized version while in review, check Florian Turati’s GitHub page)

- Florian Turati’s MSc thesis about FLoC: PDF

BibTex

@article{turati2023locality,

title={Locality-Sensitive Hashing Does Not Guarantee Privacy! Attacks on Google's FLoC and the MinHash Hierarchy System},

author={Turati, Florian and Kubicek, Karel and Cotrini, Carlos and Basin, David},

journal={Proceedings on Privacy Enhancing Technologies},

pages={117-131},

volume={2023},

number={4},

year={2023},

doi={10.56553/popets-2023-0101}

}

Locality-Sensitive Hashing not only in Google FLoC

Google proposed FLoC, a “private targeted advertising” system. On first look, it seemed as a great improvement to users’ privacy, but after a while, we realized that it leaks information where no one expected it to do so - from using Locality-Sensitive Hashing (LSH), which was never meant for privacy applications!

LSH maps similar inputs to similar outputs. It is a neat method for clustering or duplicates detection. Google used one of its flavors in detecting duplicate websites.

After a failed test on tens of millions of users Google discontinued FLoC in 2022, unlucky for our research, but definitely the correct decision for users’ privacy. Yet the reason for doing so was not the information leakage we found but rather the backlash. To that end, LSH is still applied in other privacy projects. We evaluate the information leakage of LSH in systems that use it to establish privacy - FLoC and MinHash Hierarchy systems.

FLoC

The key idea of FLoC is to take the user’s browsing history, and create a 50-bit hash (fingerprint) of it locally in the browser using LSH. This hash is then used to cluster users with similar history, assigning them a cluster ID. Only this ID is then shared with trackers and advertisers, keeping the web content still targeted, but to groups of at least k > 2000 users in cluster, providing k-anonymity.

We propose these attacks on FLoC:

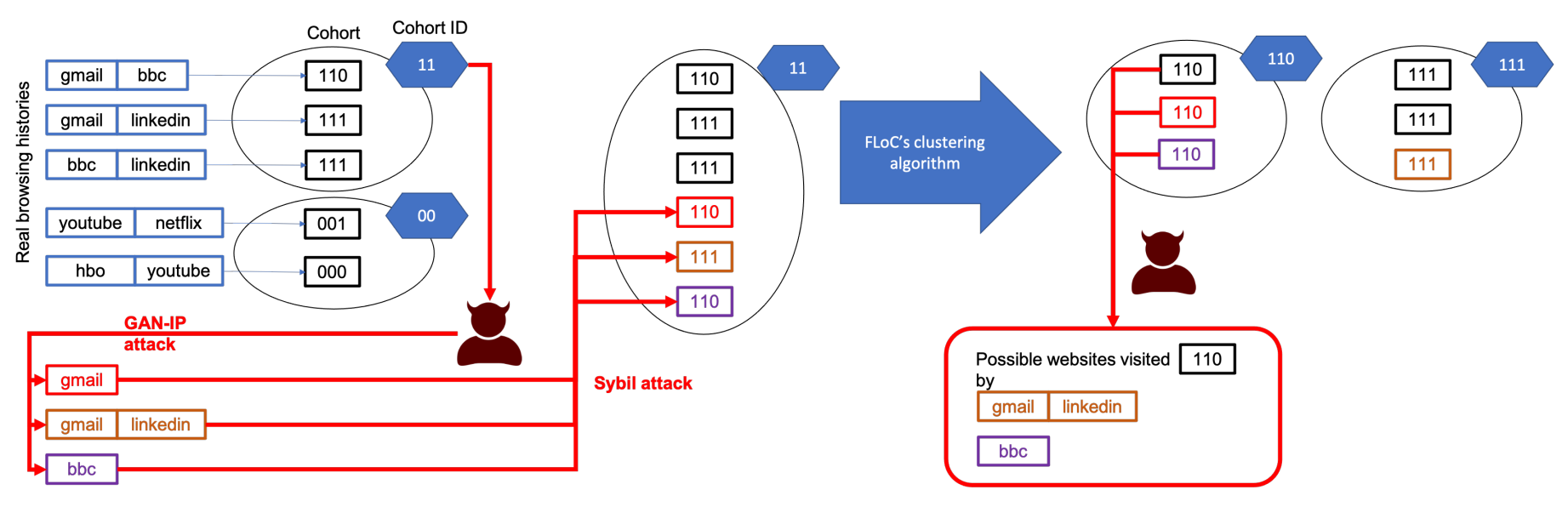

- Sybil attack: Cryptographic hash functions are resistant to finding pre-images. This is not the case for LSH. We use integer programing to find pre-images in less than a second, allowing us to generate thousands of fake users that can fill cohort and break k-anonymity.

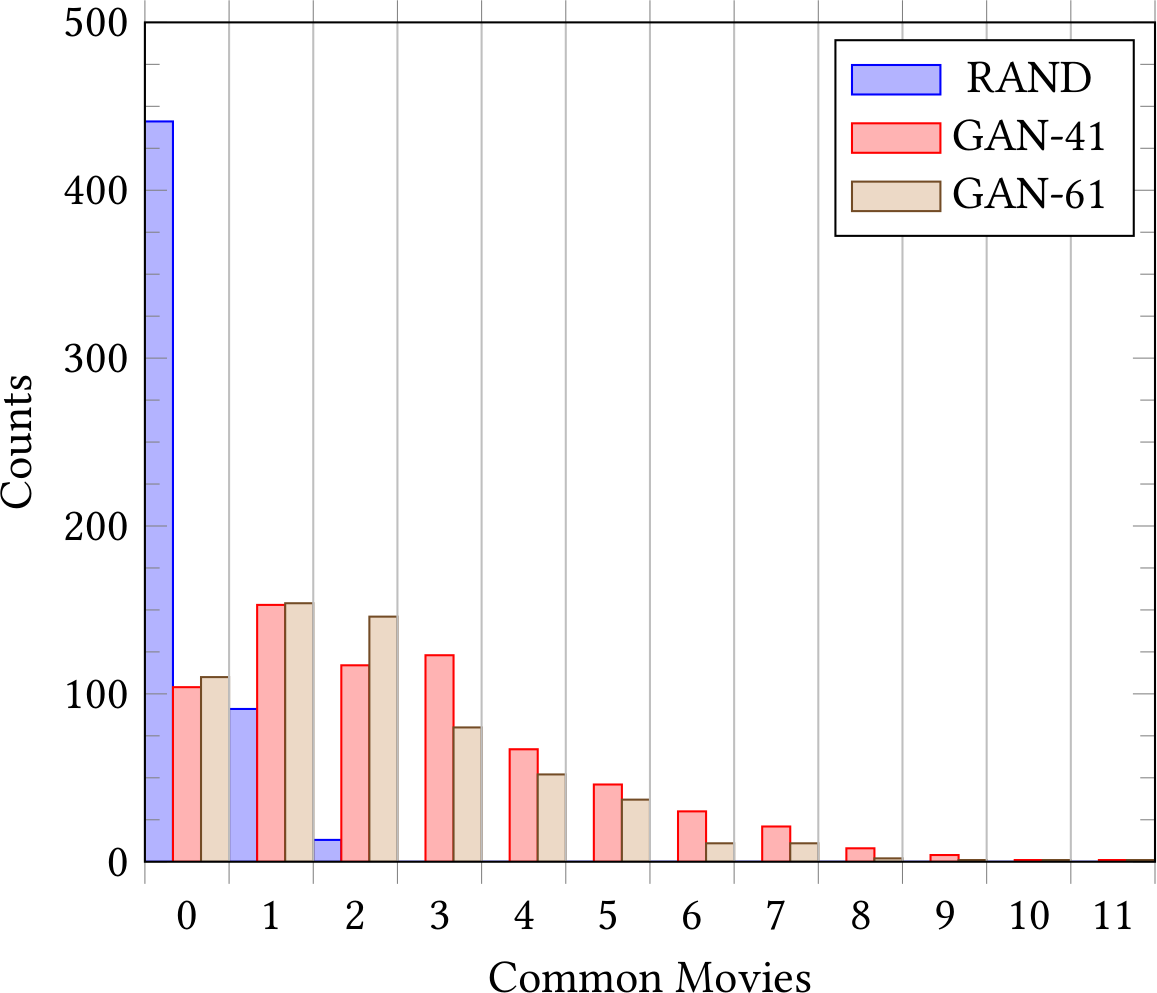

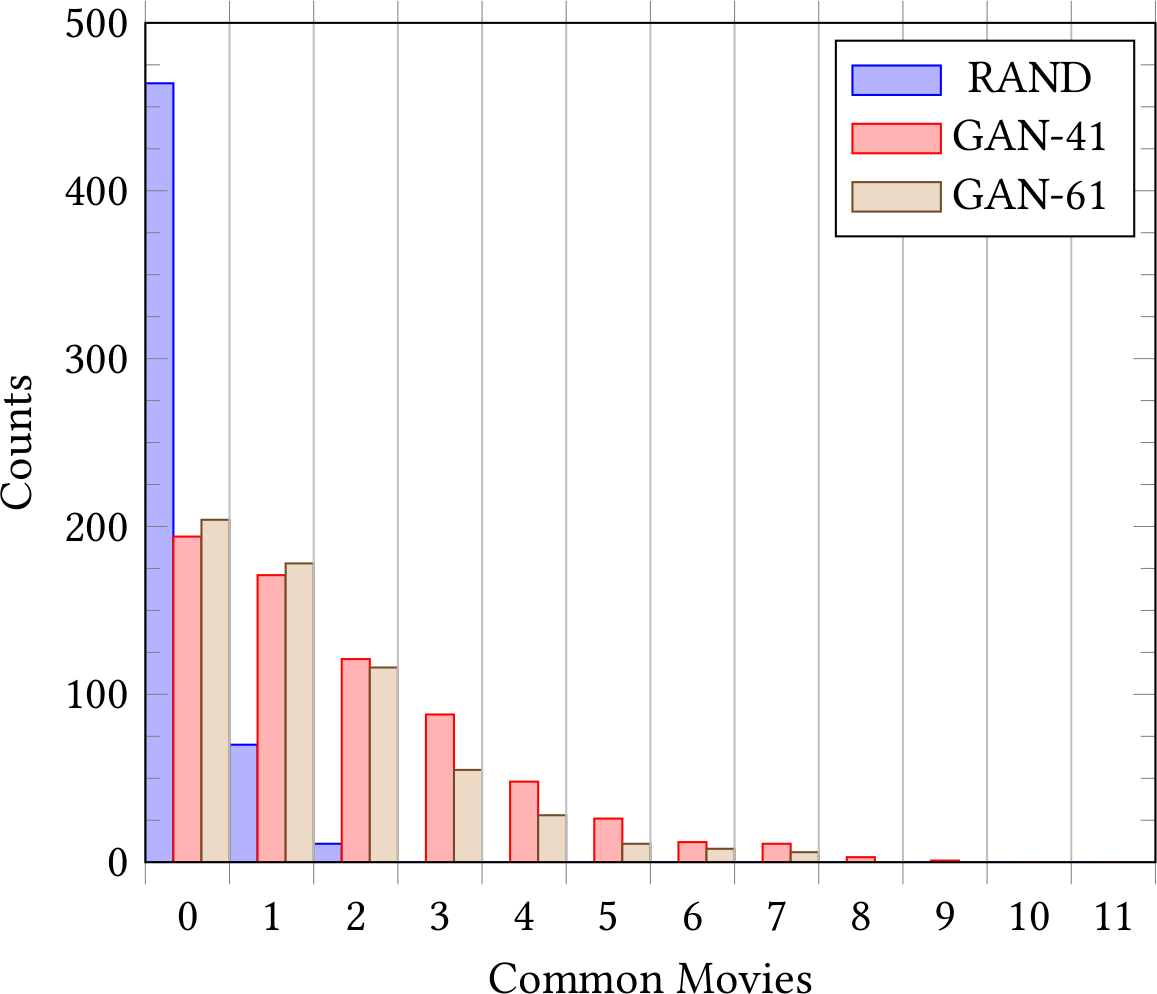

- GAN-IP attack: Using Generative Adversarial Networks (GAN), we are able to generate plausible-looking browsing histories capturing behavior patterns of real users. While this generated history does not match the SimHash of the target user, we then use the integer programming to reduce the history to match the target hash. The GAN-IP attack can reconstruct 10% or more of the history in at least 30% of the cases, breaking FLoC’s guarantees of keeping browsing histories private. We compare the attack to the baseline of random guessing of histories and find significantly more information reconstructed by our GAN-IP attack.

The scheme of the GAN-IP attack, extracting private information from FLoC. First, the attacker takes a cohort ID 𝛾 and then uses the GAN-IP attack to create fake browsing histories whose SimHash contains 𝛾 as prefix. These SimHashes make the cohort decomposable, so FLoC’s clustering algorithm divides the cohort into smaller cohorts. The attacker exploits the fake histories to infer websites visited by real users.

The scheme of the GAN-IP attack, extracting private information from FLoC. First, the attacker takes a cohort ID 𝛾 and then uses the GAN-IP attack to create fake browsing histories whose SimHash contains 𝛾 as prefix. These SimHashes make the cohort decomposable, so FLoC’s clustering algorithm divides the cohort into smaller cohorts. The attacker exploits the fake histories to infer websites visited by real users.

Distribution of overlap between target user’s 30 items history and generated histories by GAN (left) and GAN-IP (right) attacks. While the integer programming reduces the absolute number of recovered history items, the relative portion of history the attack correctly found increases from 8% to 12%. Note that for privacy reasons, we worked with public MovieLens Datasets.

Distribution of overlap between target user’s 30 items history and generated histories by GAN (left) and GAN-IP (right) attacks. While the integer programming reduces the absolute number of recovered history items, the relative portion of history the attack correctly found increases from 8% to 12%. Note that for privacy reasons, we worked with public MovieLens Datasets.

MinHash Hierarchy

MinHash Hierarchy is a system for urban planning. It collects cellular data tracking movement of people in the city to extract statistics about citizens’ behavior. It uses LSH to keep the individual’s data private, even claiming that it achieves differential privacy.

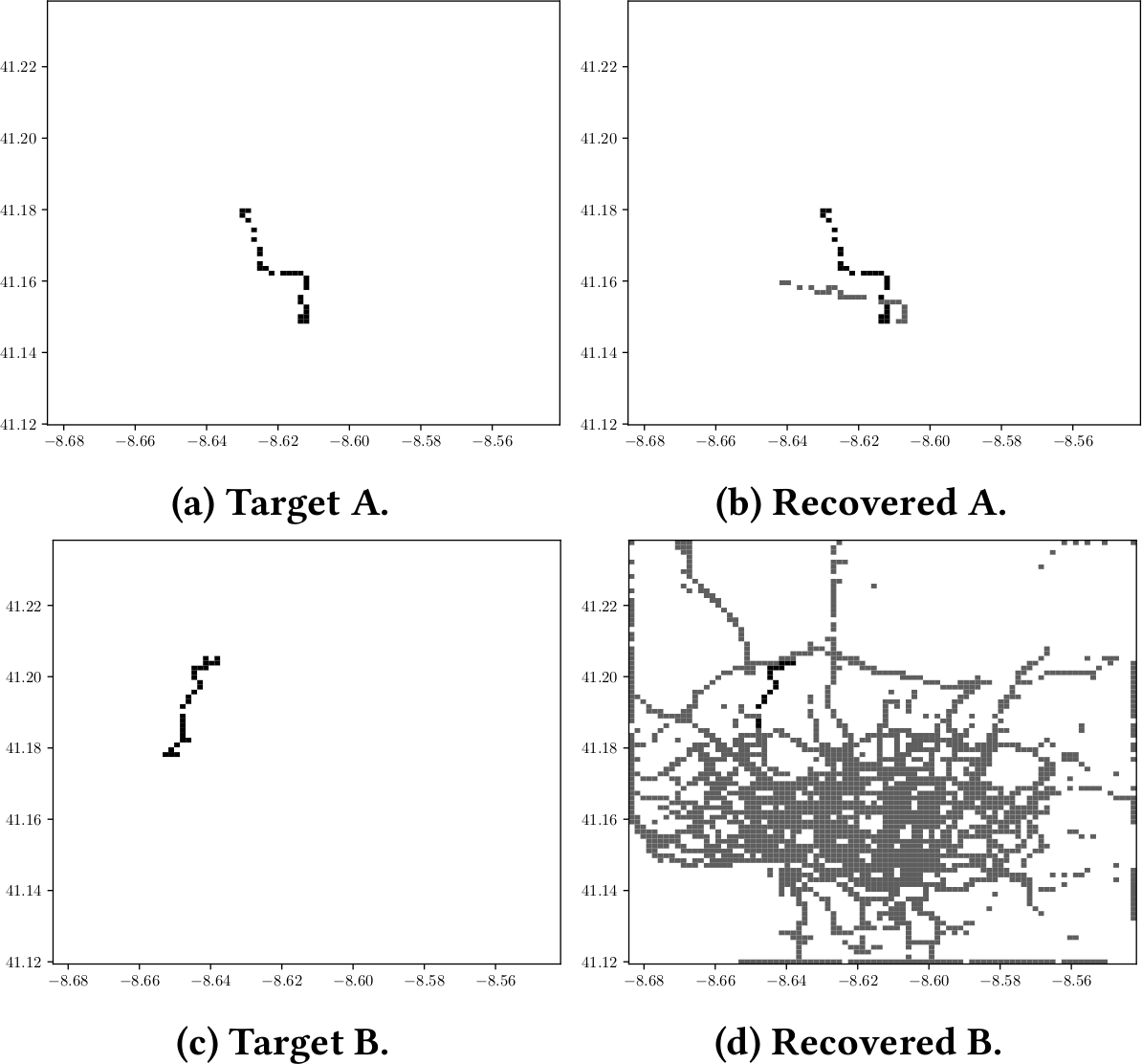

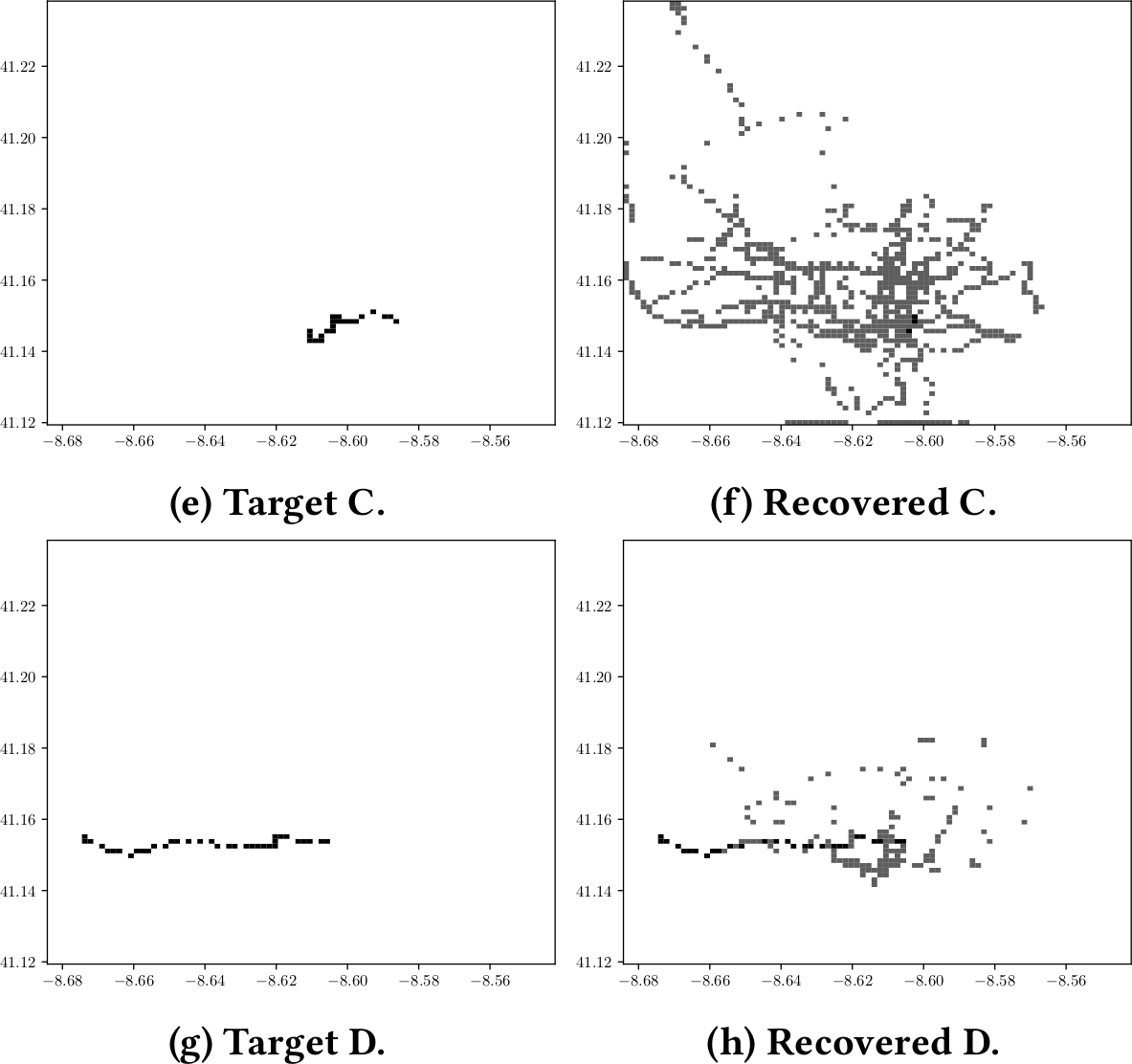

We show that the differential privacy claims are wrong, since some citizens can be completely tracked. We again evaluate the information leakage from recovering hash pre-images, and we find that in a city as Porto, we can track users down to 10% of area.

Four randomly chosen trajectories of taxi drivers in Porto denoted as “Target X,” and the extracted location “Recovered X” from MinHash Hierarchy system. Gray pixels denote where the drivers potentially drove, while black pixels are places where we are certain that the driver passed by. This uses only the LSH hash content, a further post processing on geographical distribution of timestamps would narrow this map significantly more.

Four randomly chosen trajectories of taxi drivers in Porto denoted as “Target X,” and the extracted location “Recovered X” from MinHash Hierarchy system. Gray pixels denote where the drivers potentially drove, while black pixels are places where we are certain that the driver passed by. This uses only the LSH hash content, a further post processing on geographical distribution of timestamps would narrow this map significantly more.

Conclusions

We show that using locality-sensitive hashing to establish privacy into a data-collection system is a wrong idea. While hashing is lossy compression, there must remain input leakage in the hash, else LSH would not provide any utility. Obliviousness of this leakage to system designers does not mean there is none, and it resembles security by obscurity principle.

There were other systems that use LSH to establish privacy in the system, such as Apple CSAM (discontinued after attacks were discovered) and Replacement AutoEncoder. We are therefore worried if this idea is not a reappearing idea so we use our findings to discourage any privacy system designers from its usage.

Q&A

-

Q: Are users of Chrome in danger?

A: No, Google stopped testing FLoC with Chrome v.91, and discontinued the project completely in January 2022. While we have not analyzed the successor project Topics API in as much detail as FLoC, it is at least not prone to a similar attack as we show in this work.

Acknowledgement

The authors would like to thank:

- Hung Hoang for his advice on integer programming, without him we would still be using naive heuristic by orders of magnitude slower.

- Matteo Scarlata for his valuable feedback on the draft.

- MinHash Hierarchy authors for providing us with the implementation of the MinHash signature computation in their system.

Updates

- July 11, 2023: PETS final accepted version.

- March 2, 2023: Visualization.

- February 28, 2023: Link to arXiv and BibTex.

- February 25, 2023: The initial version of this page.